Divergence Mapping, Mark II

By Ben Nitkin on

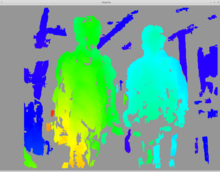

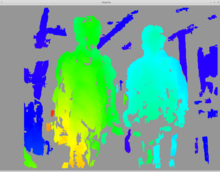

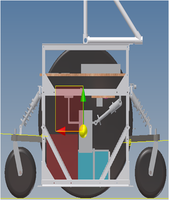

Mark II sounds technical, and so is this post. In the last post, I described how divergence mapping works. Fundamentally, divergence mapping creates a 3D image using two cameras, much like a pair of human eyes. That post goes into more detail on our high-level method; this one is about hardware.
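As a quick refresher on why two cameras are enough, here is a minimal sketch of the usual stereo relationship between pixel offset and distance. It is not the code from our project. It assumes an idealized, rectified pair of pinhole cameras, and it treats the "divergence" in this post as what stereo vision texts usually call disparity: the horizontal shift, in pixels, of the same point between the left and right images. The function name and all of the numbers are made up for illustration.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Estimate distance (metres) to a point seen by both cameras.

    Assumes an ideal rectified stereo pair:
      focal_px     - focal length expressed in pixels (hypothetical value)
      baseline_m   - distance between the two lenses in metres (hypothetical)
      disparity_px - horizontal shift of the point between the two images
    """
    if disparity_px <= 0:
        # Zero shift means the point is effectively at infinity.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Illustrative numbers only, not measurements from a real camera.
    focal_px = 600.0
    baseline_m = 0.10
    for disparity_px in (60.0, 30.0, 6.0):
        depth = depth_from_disparity(focal_px, baseline_m, disparity_px)
        print(f"shift of {disparity_px:4.0f} px -> about {depth:.2f} m away")
```

Nearby objects shift a lot between the two views and distant ones barely move. The shift is only meaningful if both frames were captured at the same instant, which is why synchronizing the cameras matters.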

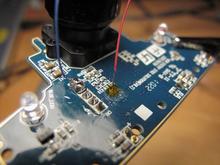

Here, you'll learn how to modify a PS3 Eye for synchronized images. (Apologies, but I'm short on pictures of the camera coming apart.)

First, remove all of the screws from the camera's backside. They're all hidden under the round rubber pads, which will pry off with a slotted screwdriver. The screws themselves are all Phillips.

Next, gently pry the back off the camera. The board and lens are attached to the front piece of plastic; the back will pull off. The two halves are held together with plastic hooks. I found that wedging a slotted screwdriver between the layers of plastic and then twisting worked well. Start at the top, and save the round bottom for last (it's tough to get open).